What is A/B Testing in Cold Email? Boost Reply Rates in 2025

Consistent results from cold outreach don’t come from luck. They take structure, testing, and insight. Every subject line, call-to-action, and send time affects engagement, but without data, improving them is just guesswork.

That’s why A/B testing in cold email is essential for serious senders. It transforms random outreach into measurable progress, helping you refine your messaging and maximize every campaign’s impact.

This blog breaks down how A/B testing works, what to test, and how to analyze results. It also shows how tools like Manyreach can help you reach more inboxes and accelerate performance with A/Z testing.

What Is A/B Testing?

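A/B testing (also called split testing) means sending two versions of an email that differ in a single element, such as the subject line or call-to-action, to comparable groups of prospects and comparing how each performs. In cold outreach, two audiences rarely respond the same way, and A/B testing helps you uncover patterns in how prospects engage, so you can make confident decisions backed by real data rather than intuition.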

Cold email campaigns rely on precision. You only have a few seconds to make a strong impression, and testing lets you fine-tune that moment. Without A/B testing, teams rely on assumptions and creative guesses. With it, they rely on proof, and that difference can dramatically improve results and efficiency.

How to Perform A/B Testing with Cold Emails?

Here is how you can perform A/B testing for your cold emails. Let’s get started:

Step 1: Purchase the Right Tool

First, choose a tool that can handle thousands of emails without hurting your deliverability. Here are a few features to look for when choosing a cold email tool:

- A/B Testing

- Unlimited Warm-ups

- Auto-mailbox Rotations

- API Access

- Unlimited Seats

- Webhooks & Integrations

- Master Inbox

Please Note: Manyreach’s version of A/B testing is called “A/Z Testing”. It lets you test multiple email variants within a single campaign, so you get a data-backed report showing which version performs better.

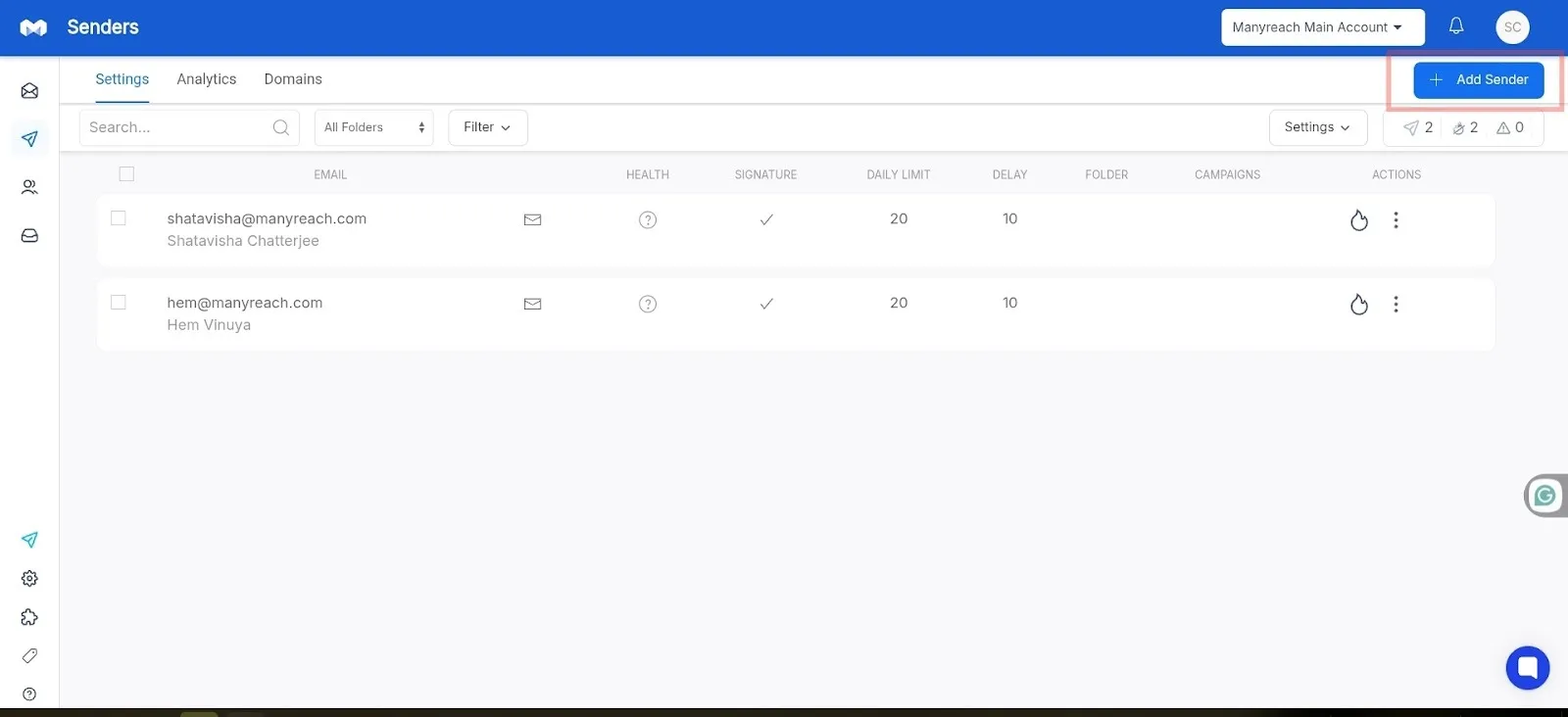

Step 2: Add Sender

After purchasing your tool, add your sender accounts before you send any cold emails. Then connect the tool to your email service provider (ESP), and make sure your SPF, DKIM, DMARC, and MX records are set up correctly.

Pro Tip: Don’t forget to warm up your email account before starting your campaign. Do it gradually, over a two-week period.

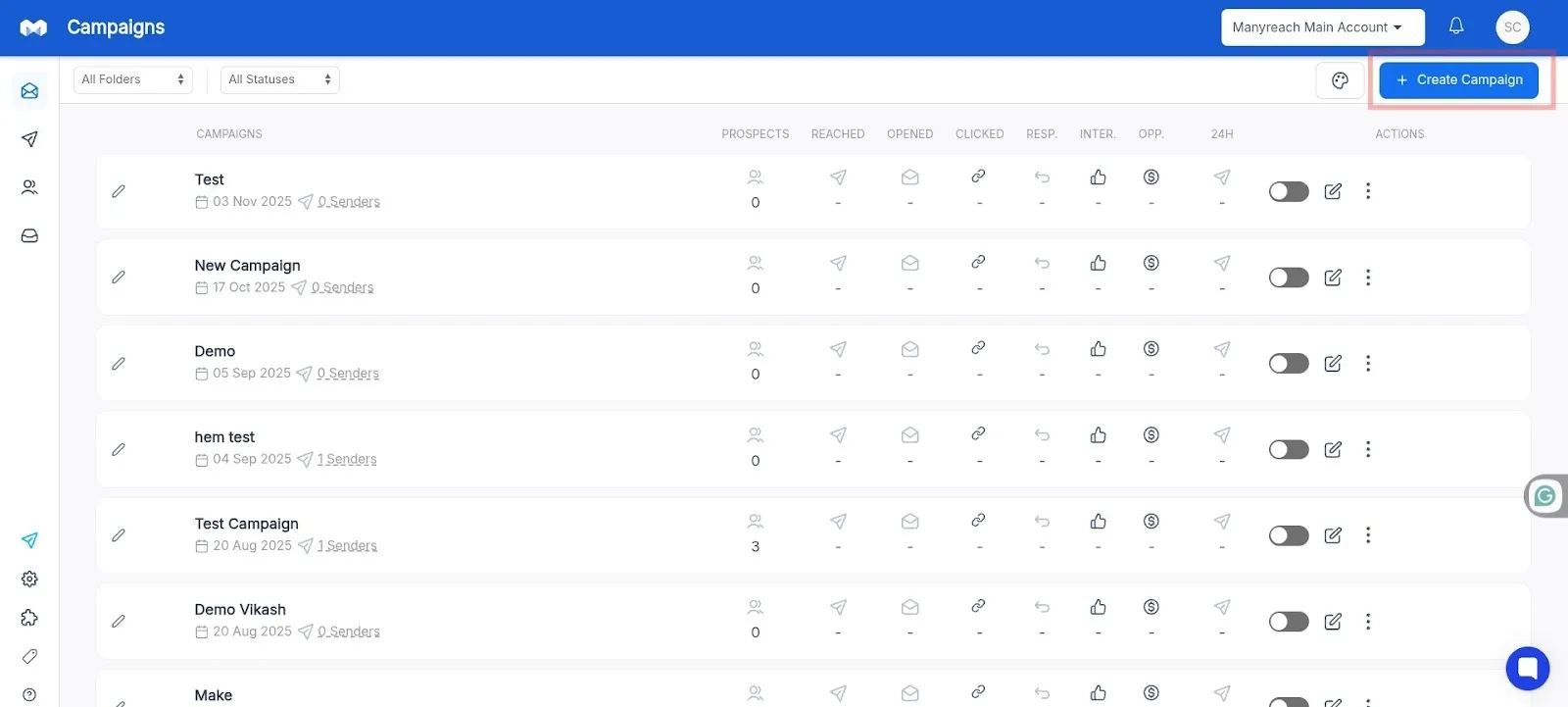

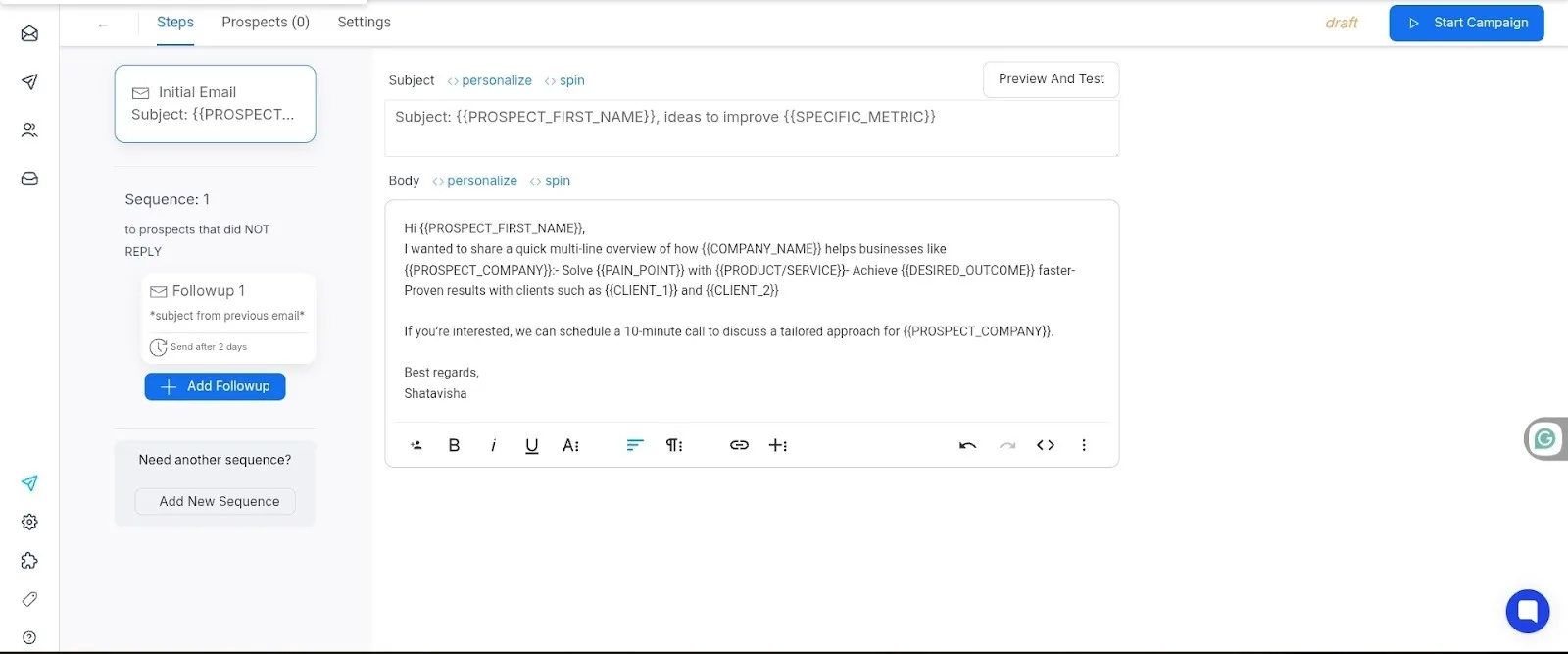
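The gradual two-week warm-up from the tip above can be pictured as a daily sending cap that ramps up over time. The sketch below assumes a simple linear ramp from 5 to 40 emails per day; both numbers are hypothetical placeholders rather than recommendations, and many cold email tools automate warm-up for you, so treat this only as an illustration of what "gradually" means.

```python
def warmup_schedule(start: int = 5, target: int = 40, days: int = 14) -> list[int]:
    """Daily send caps rising linearly from `start` to `target` over `days` days.

    The default numbers are hypothetical placeholders, not recommendations.
    """
    if days < 2:
        return [target]
    step = (target - start) / (days - 1)
    # Round each day's cap to a whole number of emails.
    return [round(start + step * day) for day in range(days)]


for day, cap in enumerate(warmup_schedule(), start=1):
    print(f"Day {day:2d}: send up to {cap} emails")
```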

Step 3: Create Your Campaign and Add Your List

Once you’ve created your account, go to your campaign dashboard and find the create campaign option.

Next, add your email accounts and upload your lead list, making sure your leads are segmented and organized. The lead list needs to be in CSV format.
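Because the lead list has to be a CSV file, it can help to sanity-check it before uploading. This is a minimal sketch using Python’s standard `csv` module; the column names `email`, `first_name`, and `company` are hypothetical and should be matched to whatever your tool’s import expects. It rejects a file that is missing a required column and skips blank or duplicate addresses (the `@` check is deliberately crude, not real email validation).

```python
import csv
import io

# Hypothetical column names; match them to your tool's import format.
REQUIRED_COLUMNS = {"email", "first_name", "company"}


def load_leads(csv_text: str) -> list[dict[str, str]]:
    """Parse a CSV lead list, check required columns, and drop unusable rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")

    leads, seen = [], set()
    for row in reader:
        email = (row["email"] or "").strip().lower()
        if "@" not in email or email in seen:
            continue  # skip blank, malformed, or duplicate addresses
        seen.add(email)
        leads.append({**row, "email": email})
    return leads


sample = """email,first_name,company
ana@example.com,Ana,Acme
,Ben,Globex
ANA@example.com,Ana,Acme
cho@example.org,Cho,Initech
"""
print(len(load_leads(sample)))  # 2
```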

Step 4: Write the Email

Now it’s time to write your email. Add a crisp subject line, followed by a catchy opening line. Then write a strong email body and a clear CTA.

Step 5: A/B Test Your Email

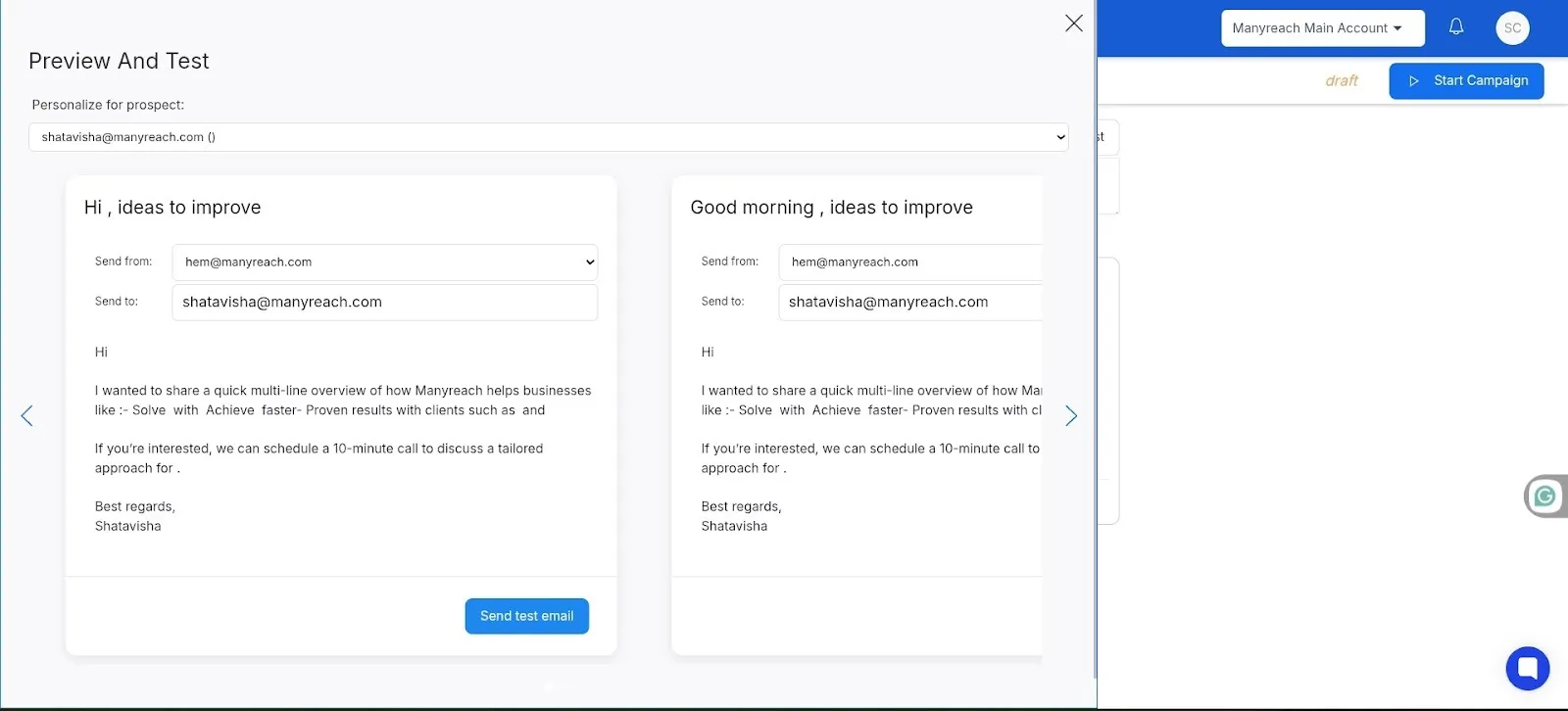

In the top-right corner of the compose page, find the “Preview and Test” option. There you’ll find several test options, along with personalization options for each prospect.

Choose your variant, and send your test email.

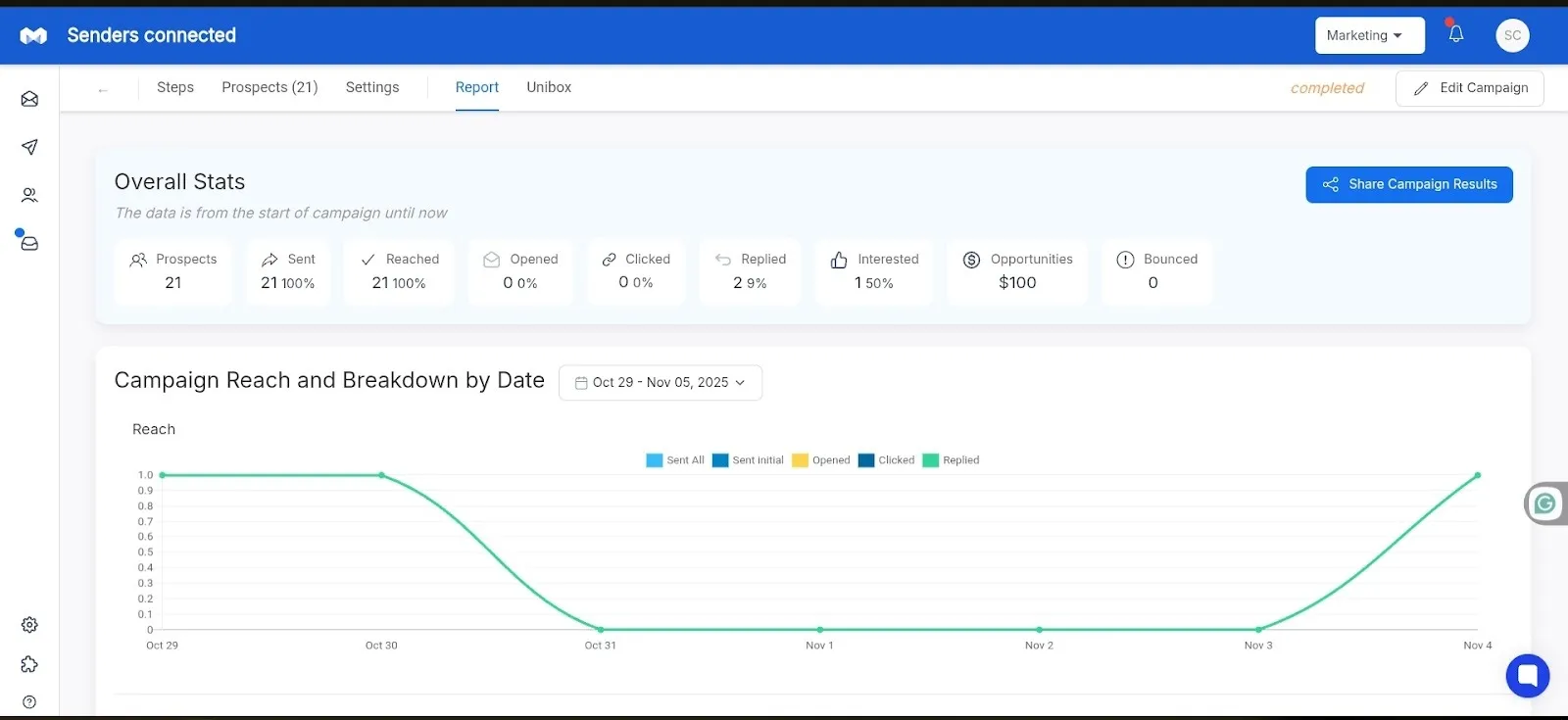

Step 6: Evaluate Metrics

Finally, keep an eye on key metrics such as open rates, reply rates, and click-through rates. This data is available on your cold email tool’s analytics dashboard.

Key Factors to Consider Before A/B Testing

To run a successful test, you need clarity and control. Change only one element per test, track measurable results, and keep conditions consistent across versions so you can see which version actually works better.

Define a Clear Objective

Every A/B test starts with a specific goal. Are you trying to boost open rates, get more replies, or increase link clicks? A clear objective determines what you test and how you measure success.

For example, if your open rates are low, your focus should be on the subject line. If replies are lacking, test the tone, personalization, or call-to-action.

Form a Testable Hypothesis

A hypothesis is your prediction about which version will perform better and why. For example:

“Personalized subject lines will outperform generic ones because they feel more relevant.”

Stating your hypothesis upfront keeps you focused and helps you evaluate results more objectively.

Segment Your Audience

Divide your prospect list into two balanced segments. Both groups should share similar characteristics, such as industry, company size, and location. This prevents external factors from skewing the results.

If one group receives mostly enterprise prospects and the other startups, your results won’t reflect the impact of your test variable; they’ll reflect audience differences.
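One way to get balanced segments is a stratified random split: group prospects by a shared trait, shuffle each group, and deal half to each version. This is a minimal sketch assuming prospects are dictionaries with an `industry` field (a hypothetical name); you could stratify on company size or location the same way.

```python
import random
from collections import defaultdict


def split_balanced(prospects, key, seed=42):
    """Randomly split prospects into two groups, stratified by `key` (e.g. "industry").

    Each stratum is shuffled and halved, so both groups get a similar mix.
    """
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    strata = defaultdict(list)
    for prospect in prospects:
        strata[prospect[key]].append(prospect)

    group_a, group_b = [], []
    for members in strata.values():
        rng.shuffle(members)
        half = len(members) // 2
        group_a.extend(members[:half])
        group_b.extend(members[half:2 * half])
        if len(members) % 2:
            # Hand the odd prospect to whichever group is currently smaller.
            (group_a if len(group_a) <= len(group_b) else group_b).append(members[-1])
    return group_a, group_b


prospects = [
    {"email": f"p{i}@example.com", "industry": industry}
    for i, industry in enumerate(["saas"] * 5 + ["fintech"] * 3 + ["retail"] * 2)
]
group_a, group_b = split_balanced(prospects, "industry")
print(len(group_a), len(group_b))  # 5 5
```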

Create Two Versions of Your Email

Always create two versions of your email.

Version A is your control, i.e., the standard email you’ve been sending.

Version B is your variation, in which exactly one element changes. Keep everything else identical to maintain test accuracy.

For instance, if you’re testing a new subject line, make sure the body, signature, and sending schedule remain the same.

Schedule and Send Under Equal Conditions

Timing affects email performance, so send both versions during the same period, ideally within the same time window. Avoid splitting the versions across different days or time zones, as that can introduce bias.

Measure and Analyze Results

After sending, monitor your campaign statistics and focus on the metric most relevant to your test:

- Open Rate is for subject line tests

- Reply Rate is for copy and CTA tests

- Click Rate is for link or offer tests

Collect enough data before you draw conclusions. Once results stabilize, identify the winning variant, record what you learned, and apply it to future campaigns.
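The metric-to-test mapping in the list above can be written down directly, so each test is judged only on its primary metric. This sketch assumes every rate is computed per delivered email; some tools divide clicks by opens instead, so check how your dashboard defines them. The class, function names, and counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    name: str
    delivered: int  # emails that reached the recipient (sends minus bounces)
    opens: int
    replies: int
    clicks: int

    def rate(self, metric: str) -> float:
        """Rate of the given metric per delivered email (0.0 if nothing was delivered)."""
        count = getattr(self, metric)
        return count / self.delivered if self.delivered else 0.0


# Primary metric for each kind of test, following the list above.
PRIMARY_METRIC = {"subject_line": "opens", "copy_or_cta": "replies", "link_or_offer": "clicks"}


def leading_variant(a: VariantStats, b: VariantStats, test_type: str) -> str:
    """Name of the variant ahead on the test's primary metric (ties go to A, the control).

    Being ahead is not the same as winning: check significance before acting.
    """
    metric = PRIMARY_METRIC[test_type]
    return a.name if a.rate(metric) >= b.rate(metric) else b.name


a = VariantStats("A", delivered=400, opens=180, replies=12, clicks=5)
b = VariantStats("B", delivered=400, opens=210, replies=9, clicks=6)
print(leading_variant(a, b, "subject_line"))  # B (52.5% vs 45.0% opens)
print(leading_variant(a, b, "copy_or_cta"))   # A (3.0% vs 2.25% replies)
```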

Cold Email A/B Testing Methods

Although A/B testing generally means comparing two versions, there are two main approaches to cold email A/B testing. Choose the one that fits your goals and resources.

Classic A/B Testing

Classic A/B testing is the standard form. You send two versions, A and B, to similar segments of your audience and compare performance metrics such as open rate or reply rate.

It’s simple, fast, and ideal when you’re testing one variable at a time, such as a subject line or call-to-action.

Multivariate A/B Testing

For advanced senders, multivariate testing lets you experiment with multiple variables simultaneously, such as combining different subject lines with different CTAs or tones. While it delivers deeper insights, it requires much larger sample sizes, because every combination of variables needs enough sends of its own, and more sophisticated tools to ensure valid results.

Pro Tip: If your contact list is small, stick to classic A/B testing for more accurate results. Once your outreach volume scales, move toward multivariate testing for richer insights.
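To see why multivariate testing needs a bigger list, count the combinations: each option of each element multiplies the number of variants, and every variant needs its own share of sends. The sketch reuses subject lines and CTAs from examples elsewhere in this article; the two tones and the 300-sends-per-variant floor are hypothetical.

```python
from itertools import product

# Example elements from this article; the tones are hypothetical.
subject_lines = ["{{FirstName}}, quick idea for {{Company}}", "Quick idea for your team"]
ctas = ["Worth a quick 10-minute chat?", "Can we connect for a short call this week?"]
tones = ["formal", "casual"]


def plan_multivariate(*elements, sends_per_variant=300):
    """Return every combination of element options and the total sends needed.

    `sends_per_variant` is a hypothetical minimum per combination.
    """
    variants = list(product(*elements))
    return variants, len(variants) * sends_per_variant


variants, total_sends = plan_multivariate(subject_lines, ctas, tones)
print(len(variants))  # 8 combinations (2 x 2 x 2)
print(total_sends)    # 2400 sends, versus 600 for a classic two-version test
```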

Benefits of Cold Email A/B Testing

A/B testing brings structure to experimentation. Over time, it leads to consistent improvements that lift performance across every campaign.

It helps you identify what actually works, including subtle touchpoints that improve engagement, so you can refine your outreach strategy with precision.

Improve Email Open Rates

A/B testing helps you determine which subject lines and preview texts drive the most opens.

According to recent statistics, cold emails that include the recipient’s first name in the subject line or preview text are 32.7% more likely to get a reply.

By experimenting with personalization, tone, and length, you can learn what catches your prospects’ attention.

For example, you can test curiosity-driven versus benefit-focused subject lines, or compare personalized greetings against generic ones, to find which approach gets recipients to open your emails more often.

Increase Click-Through Rates

Split testing helps you pinpoint the CTAs and body copy that drive the most clicks. For example, adjusting phrasing such as “Schedule a quick chat” versus “Can we connect for 10 minutes?” can produce measurable differences in engagement.

Boost Conversion Rates

Beyond clicks, A/B testing helps you optimize for better conversions through replies, booked meetings, or demo sign-ups. When you test variations in tone, visuals, CTA placement, and offer wording, you can discover which combination drives the best outcomes.

This data-driven refinement helps each iteration move your prospects further down the funnel.

Higher Cost-Effectiveness

Testing eliminates guesswork and is highly cost-effective. Once you identify the top-performing content and discard the under-performing versions, you save time, reduce outreach fatigue, and allocate resources efficiently.

Over time, this reduces your cost per conversion and helps you get more results from the same number of sends, a true efficiency gain for any outreach team.

Reduce Bounce Rates and Improve Deliverability

Well-structured A/B tests reveal how factors like subject wording, email formatting, and send timing affect deliverability. For example, cleaner formatting, fewer links, and well-crafted copy can reduce spam flags and improve inbox placement.

When you test and refine consistently, you maintain a stronger sender reputation and keep bounce rates low.

Strategic Sending Schedules Increase Reply Rates

Timing matters as much as content, so experiment with different days and hours to see when your audience is most responsive. For example, more than 7.56% of emails sent on weekdays get responses, with Wednesday performing especially well, so sending during those times can increase your chances of getting a reply.

Audience matters too: early mornings might work best for executives, while afternoons might perform better for tech professionals.
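A timing test comes down to grouping results by when each email was sent. This small sketch assumes you can export each send’s date and whether it got a reply; the log below is made up, and the function name is hypothetical. Weekday names come from a fixed tuple rather than locale-dependent formatting.

```python
from collections import defaultdict
from datetime import date

WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")


def reply_rate_by_weekday(sends):
    """Group (send_date, replied) pairs by weekday and return each day's reply rate."""
    totals = defaultdict(lambda: [0, 0])  # weekday name -> [replies, sends]
    for send_date, replied in sends:
        day = WEEKDAYS[send_date.weekday()]  # weekday(): Monday == 0
        totals[day][0] += int(replied)
        totals[day][1] += 1
    return {day: replies / count for day, (replies, count) in totals.items()}


# Hypothetical send log: (date sent, whether the prospect replied)
log = [
    (date(2025, 3, 3), False),   # Monday
    (date(2025, 3, 5), True),    # Wednesday
    (date(2025, 3, 5), False),   # Wednesday
    (date(2025, 3, 12), True),   # Wednesday
]
print(reply_rate_by_weekday(log))
```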

What to Test in Your Cold Email Campaigns

Each test should align with a measurable outcome. Focus on one area at a time for clarity and accuracy.

Here are the things you need to test before you start your outreach campaign:

Subject Lines for Better Open Rates

Your subject line largely determines whether the email gets opened.

Test formats such as:

- Short vs. descriptive subject lines

- Personalized “{{FirstName}}, quick idea for {{Company}}” opening vs. general “Quick idea for your team” ones

- Curiosity-driven vs. benefit-driven subject lines
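Personalized subject lines like the `{{FirstName}}` example above only work if every merge field is actually filled in; a blank field produces something like ", quick idea for Acme". This sketch assumes the double-brace placeholder syntax shown in this article, which may differ from your tool’s, and refuses to render a line when a field is missing. The function name is hypothetical.

```python
import re


def render(template: str, prospect: dict[str, str]) -> str:
    """Replace {{Field}} placeholders with prospect data; fail loudly on missing fields."""

    def substitute(match):
        field = match.group(1)
        if not prospect.get(field):
            raise KeyError(f"prospect is missing {field!r}")
        return prospect[field]

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


variant_a = "{{FirstName}}, quick idea for {{Company}}"
variant_b = "Quick idea for your team"
prospect = {"FirstName": "Dana", "Company": "Acme"}
print(render(variant_a, prospect))  # Dana, quick idea for Acme
print(render(variant_b, prospect))  # Quick idea for your team
```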

Email Body & CTA for Better Reply Rates

Once your prospect opens the email, the next challenge is holding their attention. Test structure and tone to see what keeps readers engaged:

- Short, direct paragraphs vs. narrative storytelling

- Soft introductions vs. problem-led openers

- Personalized pain points vs. overall industry claims

The CTA determines how the reader responds, so test its style, placement, and tone.

Here are some examples:

- “Worth a quick 10-minute chat?” vs. “Can we connect for a short call this week?”

- CTA at the end vs. CTA after the first paragraph

- Question format vs. statement format

Testing Duration and Sample Size

Your test’s credibility depends on its sample size and duration. Avoid running a test for too short a period, as it can produce misleading results.

Choosing the Right Duration

In most cases, a 1–2 week period will give you enough data to reach a valid conclusion. This timeframe captures day-to-day variations in recipient behavior and gives your test a better chance of reaching statistical significance.
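A testing period alone does not guarantee significance; you still need to check whether the gap between variants is bigger than chance would produce. One common way to do that for rates such as opens or replies is a two-proportion z-test, sketched below with the standard library. This is a generic statistical technique, not necessarily what any particular tool uses, and the counts are hypothetical.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int):
    """Two-sided z-test for a difference between two rates (e.g. reply rates).

    Returns (z, p_value). Assumes independent recipients and reasonably large samples.
    """
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all-or-nothing outcomes in both groups: no evidence of a difference
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical results: A got 20 replies from 400 sends, B got 36 from 400.
z, p = two_proportion_z_test(20, 400, 36, 400)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here the p-value comes out below 0.05, so at the conventional 5% level B’s higher reply rate is unlikely to be noise. A gap that does not clear that bar is the kind of result worth treating as a tie.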

Determining Sample Size

Aim for at least a few hundred sends per version. Larger samples reduce the impact of outliers and help validate your findings, and the smaller the difference you expect between versions, the more sends you need to detect it reliably. If your campaign volume is low, run the test longer to compensate.
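How many sends count as "enough" depends on how big a difference you want to detect. The standard sample-size formula for comparing two proportions makes this concrete. The sketch below assumes a two-sided test at 5% significance with 80% power (common defaults, not rules) and hypothetical baseline rates; the function name is illustrative.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Sends needed per variant to detect p_control -> p_variant with a two-sided test."""
    if p_control == p_variant:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_variant) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant)))
    return math.ceil(spread ** 2 / (p_control - p_variant) ** 2)


print(sample_size_per_variant(0.05, 0.09))  # large lift: several hundred per variant
print(sample_size_per_variant(0.05, 0.06))  # small lift: thousands per variant
```

With a 5% baseline reply rate, detecting a jump to 9% takes roughly 640 sends per variant, while detecting a jump to 6% takes over 8,000. That is why smaller lists get more out of bold changes than subtle ones.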

Avoiding False Positives

Don’t stop a test early just because one version appears to be leading. Email engagement fluctuates during the first few days, so wait until your data stabilizes. Patience is what produces reliable insights.
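The cost of stopping early can be shown with an A/A simulation, where both "variants" share the same true reply rate, so any declared winner is a false positive. The sketch below uses hypothetical volumes (50 sends per variant per day for 10 days, a 10% reply rate) and compares checking significance every day against checking once at the end, using a two-proportion z-test as the significance check.

```python
import math
import random


def p_value(replies_a, n_a, replies_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (replies_a + replies_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (replies_b / n_b - replies_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_peeking(trials=1000, days=10, sends_per_day=50, reply_rate=0.1, seed=7):
    """A/A simulation: both variants share one true reply rate, so every 'winner' is false.

    Returns (false-positive rate when checking daily, rate when checking only at the end).
    """
    rng = random.Random(seed)
    daily_hits = final_hits = 0
    for _ in range(trials):
        replies_a = replies_b = sent = 0
        declared_early = False
        for _ in range(days):
            replies_a += sum(rng.random() < reply_rate for _ in range(sends_per_day))
            replies_b += sum(rng.random() < reply_rate for _ in range(sends_per_day))
            sent += sends_per_day
            if p_value(replies_a, sent, replies_b, sent) < 0.05:
                declared_early = True  # a daily check would have declared a winner by now
        daily_hits += declared_early
        final_hits += p_value(replies_a, sent, replies_b, sent) < 0.05
    return daily_hits / trials, final_hits / trials


daily_rate, final_rate = simulate_peeking()
print(f"checked daily: {daily_rate:.1%} false positives; checked once: {final_rate:.1%}")
```

Because the daily checks include the final one, the first rate can never be lower than the second, and with repeated looks it typically comes out well above the roughly 5% you get from a single check at the end.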

Best Practices for A/B Testing in Cold Email Campaigns

Consistency and structure are key to trustworthy results. Here’s how to get the most from every test.

Test One Variable at a Time

Changing multiple elements at once makes it impossible to know which caused the outcome. So keep your tests simple and controlled.

Align Metrics to Goals

Evaluate one primary metric per test, matched to your goal. For instance, if you’re testing subject lines, open rate is the only metric that matters for that round.

Keep Conditions Consistent

Send both variants from the same domain, to similar audiences, and at the same time; changing any of these conditions invalidates your results. However, avoid running cold email tests from your primary domain, as poor results can damage its sender reputation.

Avoid Small Changes

Changing a single punctuation mark rarely produces meaningful data. Focus instead on changes that affect perception, such as tone, structure, or offer clarity.

Maintain Deliverability Standards

Make sure your lists are verified, your sending domains are warmed up, and your emails avoid spam triggers. Even the best A/B test will fail if your emails never reach the inbox.

Document Everything

Keep a running log of what you tested, when, and what the results were. It becomes your internal knowledge base, a valuable reference for your team’s future campaigns.
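A spreadsheet-friendly CSV file is one simple format for that log. This sketch assumes a hypothetical set of fields (the one variable changed, the hypothesis, dates, the primary metric, both rates, and the outcome) and writes rows with Python’s `csv` module; the example record is made up.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ExperimentRecord:
    """One row in a running A/B test log (field names are illustrative)."""
    test_name: str
    variable: str      # the single element that changed between A and B
    hypothesis: str
    start_date: str
    end_date: str
    metric: str        # primary metric, e.g. "open rate"
    rate_a: float
    rate_b: float
    outcome: str       # "A", "B", or "tie"


def write_log(stream, records, write_header=True):
    """Write records as CSV rows; pass write_header=False when appending to an existing log."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(ExperimentRecord)])
    if write_header:
        writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


buffer = io.StringIO()
write_log(buffer, [ExperimentRecord(
    test_name="Subject line personalization",
    variable="subject line",
    hypothesis="Personalized subject lines will outperform generic ones",
    start_date="2025-03-03",
    end_date="2025-03-14",
    metric="open rate",
    rate_a=0.45,
    rate_b=0.525,
    outcome="B",
)])
print(buffer.getvalue())
```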

Optimize Outreach with Manyreach’s A/Z Testing

In a competitive outreach landscape, knowing what works beats guessing. Manyreach’s A/Z Testing goes beyond traditional A/B testing, letting you test multiple versions of your emails at once and track results in real time.

It also helps you continuously refine what drives engagement.

Effortless Multi-Variant Test Setup

With Manyreach’s A/Z Testing, you can easily create and compare multiple variants, whether of subject lines or body content.

Each variant is automatically distributed across your audience segments under identical sending conditions, ensuring fair and reliable results every time. No manual balancing is required and no random discrepancies creep in, just accurate performance insights.

Real-Time A/Z Performance Analytics

Manyreach gives you a unified performance analytics dashboard that tracks open rates, replies, and clicks for every variant in real time.

You can instantly see which version resonates best, identify the exact elements driving engagement, and pivot your outreach strategy based on clear, data-backed insights.

With side-by-side comparisons, you can see which email converts best and why.

Inbox-First Deliverability Optimization

Effective testing means nothing if your emails don’t reach the inbox.

Manyreach’s built-in deliverability optimization, including domain health checks, sending limit controls, and spam flag monitoring, helps ensure every A/Z test is both deliverable and measurable.

This lets you test real-world outcomes, not skewed data from blocked or bounced emails.

Scalable A/Z Campaign Management

Manyreach’s A/Z Testing integrates seamlessly with your outreach workflow, whether you’re running a small batch test or a large-scale multi-step campaign.

Manage personalization, scheduling, follow-ups, and version testing from a single dashboard, so you can scale your campaigns without losing precision.

And once you find the winning variant, replicate and expand it effortlessly across your entire sequence.

Turn Testing into Actionable Growth

Manyreach’s A/Z Testing doesn’t just simplify experimentation, it makes your results actionable.

Instead of guessing which tweaks work, you’ll know exactly what drives engagement and conversions. Every campaign gets better, every email more effective, and every outreach effort more impactful.

FAQs

1. What elements should I test first?

You can start with subject lines and CTAs, as they most directly influence open and reply rates. Once those improve, you can move on to personalization depth and structure.

2. How many variations should I test?

Start with two versions, since that keeps results clear. More versions require larger audiences and longer testing periods.

3. How long should each test run?

Each test should run for at least one week to produce consistent results. If engagement patterns fluctuate, extend it to two weeks.

4. What if my results are inconclusive?

If the performance gap is minimal, treat it as a tie. Keep the simpler version and plan a stronger test with more noticeable differences.

5. Can I test sequences instead of single emails?

Yes. Sequence-level testing measures engagement across follow-ups, not just initial emails. It’s more advanced but offers deeper insight.

Conclusion

A/B testing in cold email turns outreach from guesswork into a repeatable, scalable process. By testing one variable at a time, measuring results carefully, and applying what you learn, you make your emails steadily sharper, more relevant, and more effective.

Cold outreach doesn’t need to be unpredictable. With consistent testing and the right platform, like Manyreach, you can build campaigns that don’t just reach inboxes but also inspire real responses.

Through data-driven refinement, your outreach evolves from experimentation to mastery. And that’s where the true growth begins!
